enabled with FSR 4 technology

I’m pretty sure we’ll have a separate corpo-English by 2100 that is not intelligible by normal people.

The only reason I opened the article was to find out what FSR meant. They never actually spell it out. You can tell it’s AI upscaling from the context, but I guess they just assume you know the acronym…

It stands for FidelityFX Super Resolution.

You know what would gather even more interest? Games not running like shit on native resolution.

That’s “brute-force rendering”, no one does that anymore, old fart.

I find it amusing that the company that is promoting brute force calculation of ray trajectories rather than using optimised code (competition defeat device) calls native rendering “brute force”. Meanwhile some of the best games of the past decade run on potato powered chips.

I can’t get over this bullshit, Nvidia could become the best company in the world, with the best products at the best prices but I’ll never forgive this level of bullshit, fucking brute-force rendering.

Absolutely. Jensen is so rich, if he wanted to spend his fortune, he couldn’t, within the habitual human lifespan.

All that success because in the late noughties and early 10s NVIDIA (at least in the EU) was giving away free GPUs to universities and giving grants on the condition that researchers would use CUDA. Same with developers: they had two engineering teams in Eastern Europe that would serve as outsourcing for code to cheapen development of games as a way to promote NVIDIA’s software “optimisations”. Most TWIMTBP games of that era, Bryan Rizzo’s time, have some sort of competing HW defeat device. They were so successful that their modern GPUs, Blackwell, can barely run some of their old games…

Nvidia creates problem then creates solution and charges a premium for it. Industry smells money and starts including said problem in games. AMD gets left behind and tries to play catch up. Offers open source implementations of certain technologies to try and also create solution. Gamers still buy Nvidia.

Neither of these two is our friend, though AMD is much nicer to the open source world. I tend to buy AMD because at least the hardware I’ve bought has good value and tremendous Linux support.

I probably will, yeah.

Or I was going to. Would’ve got 5070 ti, but didn’t have luck with the stock when it came out then drank most of the money, thought to give it a bit of time.

I’m gonna wait a few months to see how this turns out after 5060ti comes out and whatnot.

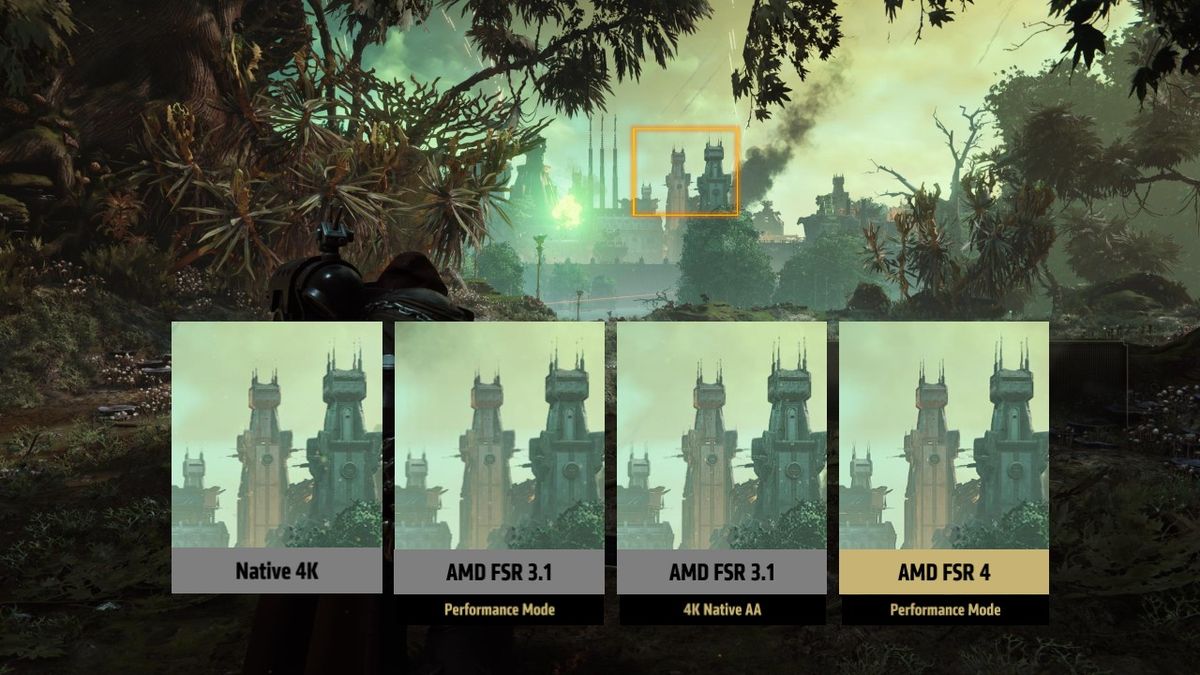

Good. FSR is finally able to compete with DLSS.

Is it? I haven’t used an Nvidia GPU since the GTX series, but my understanding was that DLSS was very effective. Meanwhile, the artifacting on FSR bothers the crap out of me.

Yes. FSR4 is the first version that uses dedicated hardware to do it, like DLSS. Consensus seems to be that it’s on the same level as DLSS 3 (CNN model) but is heavier to run, which is pretty great for a first attempt.

I see, it’s unfortunate that it requires dedicated hardware, but I guess it makes sense when the main competitor already has that.

Lol a fully dedicated tech for things we absolutely won’t notice.

FSR4 is absolutely noticeable. I can’t tell the difference between native 4k and 1440p scaled to 4k with FSR4. That’s a giant performance boost.

I’ve even been having trouble telling the difference between Super Resolution 4 and native. Driver level upscaling this good is a game changer, I might not even have to deal with optiscaler.

I’d just like to see games optimized better so FSR/DLSS isn’t needed.

AMD is fairly very aware of this

PCGamer needs to edit this stuff.