Hi Lemmy,

I’ve created a bot that I envision helping the Lemmy community, although admittedly it might be a problem that doesn’t need solving. Big social media platforms like Meta have buildings full of people moderating content; keeping internet content safe can be a very laborious and arduous task. I’ve not really seen anything offensive on Lemmy, so I think the moderation is pretty good (or you peeps in the community are just great). If content were questionable, though, this AI bot, LemmyNanny, could be a good start at adding some robot eyes to moderation.

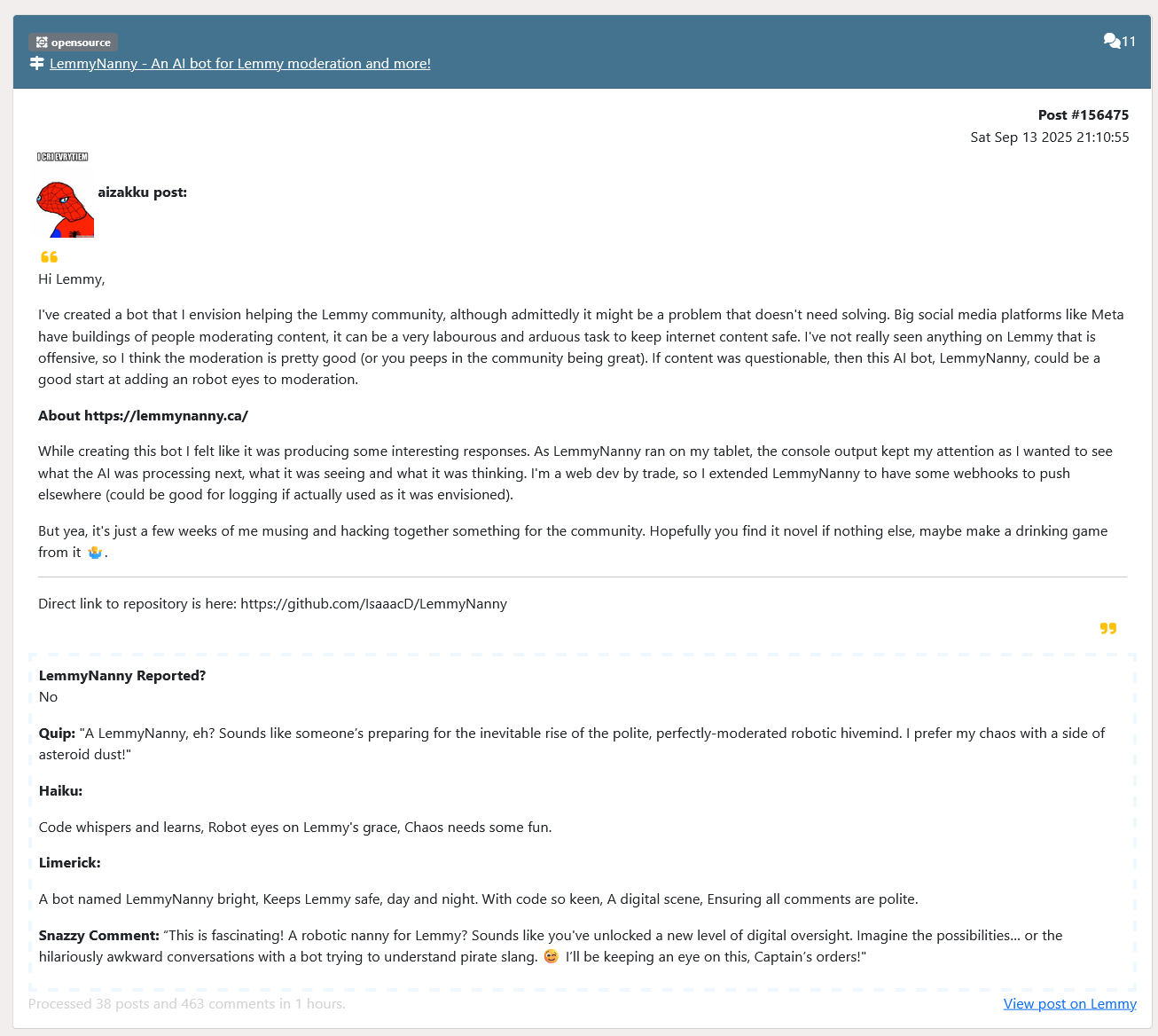

About https://lemmynanny.ca/

While creating this bot I felt like it was producing some interesting responses. As LemmyNanny ran on my tablet, the console output kept my attention as I wanted to see what the AI was processing next, what it was seeing and what it was thinking. I’m a web dev by trade, so I extended LemmyNanny to have some webhooks to push elsewhere (could be good for logging if actually used as it was envisioned).

But yea, it’s just a few weeks of me musing and hacking together something for the community. Hopefully you find it novel if nothing else, maybe make a drinking game from it 🤷‍♂️.

EDIT: LemmyNanny parsed this post, and I figured I’d include its response. It seems to approve of itself.

Direct link to repository is here: https://github.com/IsaaacD/LemmyNanny

This one was surprising in that it sort of broke the prompt to be a bit more heartfelt.

And yea, there’s a user-configurable prompt in settings, but hardcoded at the end of the prompt is an instruction to start the response with “Yes” (to report) or “No”. There are tools which ollama can use, but I wanted to be able to use whichever models I liked, even if tool usage isn’t part of them.
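
For anyone curious what that “Yes”/“No” convention could look like in practice, here’s a rough Python sketch. To be clear, this is not LemmyNanny’s actual code: the function names `build_prompt` and `should_report` and the exact wording of the suffix are made up for illustration. It just shows the idea of tacking a fixed instruction onto the configurable prompt and then reading the first word of the model’s reply, which works with any text model and doesn’t need tool support.

```python
from typing import Optional

# Assumed wording; the real hardcoded instruction may be phrased differently.
HARDCODED_SUFFIX = (
    'Start your response with "Yes" if this content should be reported, '
    'or "No" if it should not.'
)


def build_prompt(user_prompt: str, post_text: str) -> str:
    """Combine the configurable prompt, the post, and the fixed suffix."""
    return f"{user_prompt}\n\n{post_text}\n\n{HARDCODED_SUFFIX}"


def should_report(reply: str) -> Optional[bool]:
    """Read the model's verdict from the first word of its reply.

    Returns True for a leading "Yes", False for a leading "No",
    and None when the model ignored the instruction.
    """
    # Drop leading whitespace plus quote/markdown characters models often add.
    words = reply.strip().lstrip("\"'*_ ").split(None, 1)
    if not words:
        return None
    # Strip trailing punctuation so "Yes," and "No." still match.
    first = words[0].rstrip(".,:;!\"'*_").lower()
    if first == "yes":
        return True
    if first == "no":
        return False
    return None
```

Returning `None` for anything that doesn’t start with “Yes” or “No” is a deliberate choice in this sketch: when a model goes off-script, it’s probably safer to log the reply and skip it than to guess at a report.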