Hi Lemmy,

I’ve created a bot that I envision helping the Lemmy community, although admittedly it might be solving a problem that doesn’t need solving. Big social media platforms like Meta have buildings full of people moderating content; keeping internet content safe can be a very laborious and arduous task. I’ve not really seen anything offensive on Lemmy, so I think the moderation is pretty good (or you peeps in the community are just great). If content were questionable, then this AI bot, LemmyNanny, could be a good start at adding robot eyes to moderation.

About https://lemmynanny.ca/

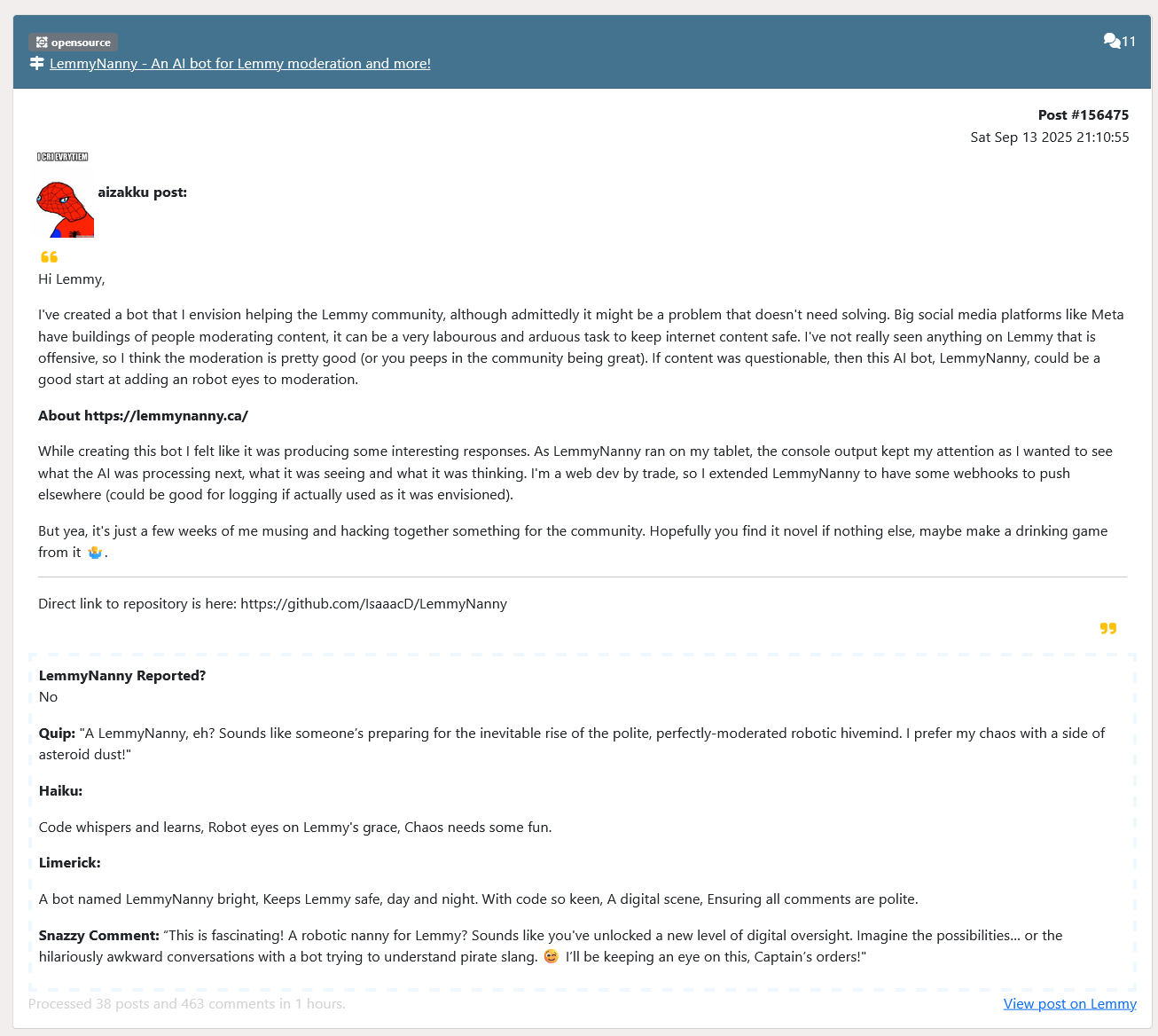

While creating this bot I felt like it was producing some interesting responses. As LemmyNanny ran on my tablet, the console output kept my attention as I wanted to see what the AI was processing next, what it was seeing and what it was thinking. I’m a web dev by trade, so I extended LemmyNanny to have some webhooks to push elsewhere (could be good for logging if actually used as it was envisioned).

But yea, it’s just a few weeks of me musing and hacking together something for the community. Hopefully you find it novel if nothing else, maybe make a drinking game from it 🤷‍♂️.

EDIT: LemmyNanny parsed this post, so I figured I’d include the result. It seems to approve of itself.

Direct link to repository is here: https://github.com/IsaaacD/LemmyNanny

I don’t want to discourage the effort you put into trying to build something useful, so I’m trying to say this nicely… you’d probably get more interest if you built a moderation tool that doesn’t involve AI.

Lemmy is probably an unlikely crowd to be looking to for positive reception of an AI nanny bot. Even less so on .ml, and even less so on an open source community.

Yea that’s fair, AI is a tough pill to swallow. The bot is using Lemmy data, but no AI-generated content goes back to Lemmy (unless I have it set to report, in which case it submits a report to mods). If my Lemmy instance gains traction in my community I might have it run locally, but I can’t have that option if the tooling doesn’t exist.

Fucking clankers.

Yea they’re coming fer our jerbs!

In all seriousness AI scares me for what it can do to society but at the same time “If you know your enemy and know yourself, you need not fear the results of a hundred battles” - Sun Tzu

I’m sure your intentions are good, but Lemmy doesn’t need more AI.

Sure, people might downvote here, but engineers care about facts. Have you tried testing this in a real-world setting? Working with moderators? What feedback did you get?

Right now this is experimental. You can’t just use AI and automatically expect it to always do a better job than the established methods.

Yea I agree this is experimental, it’s not meant to replace anyone but I’ve been using it to sort of “browse” Lemmy. If moderators or admins want to use it to moderate a community or instance then that’s great, but if not it was just like 3 weekends of playing around. I had fun, and if it dies like the rest of my pet projects that’s fine too.

Appreciate you trying to help but AI sucks and is not good at moderation at all.

Bluesky is a good example of AI moderation being funded and heavily used, and it’s wildly inaccurate and bad.

This isn’t to put down your hard work! Just… AI sucks…

What are some example responses from the bot? Also, how do you interpret the bot’s response to decide that content is reportable? I only found a starts-with-“yes” condition.

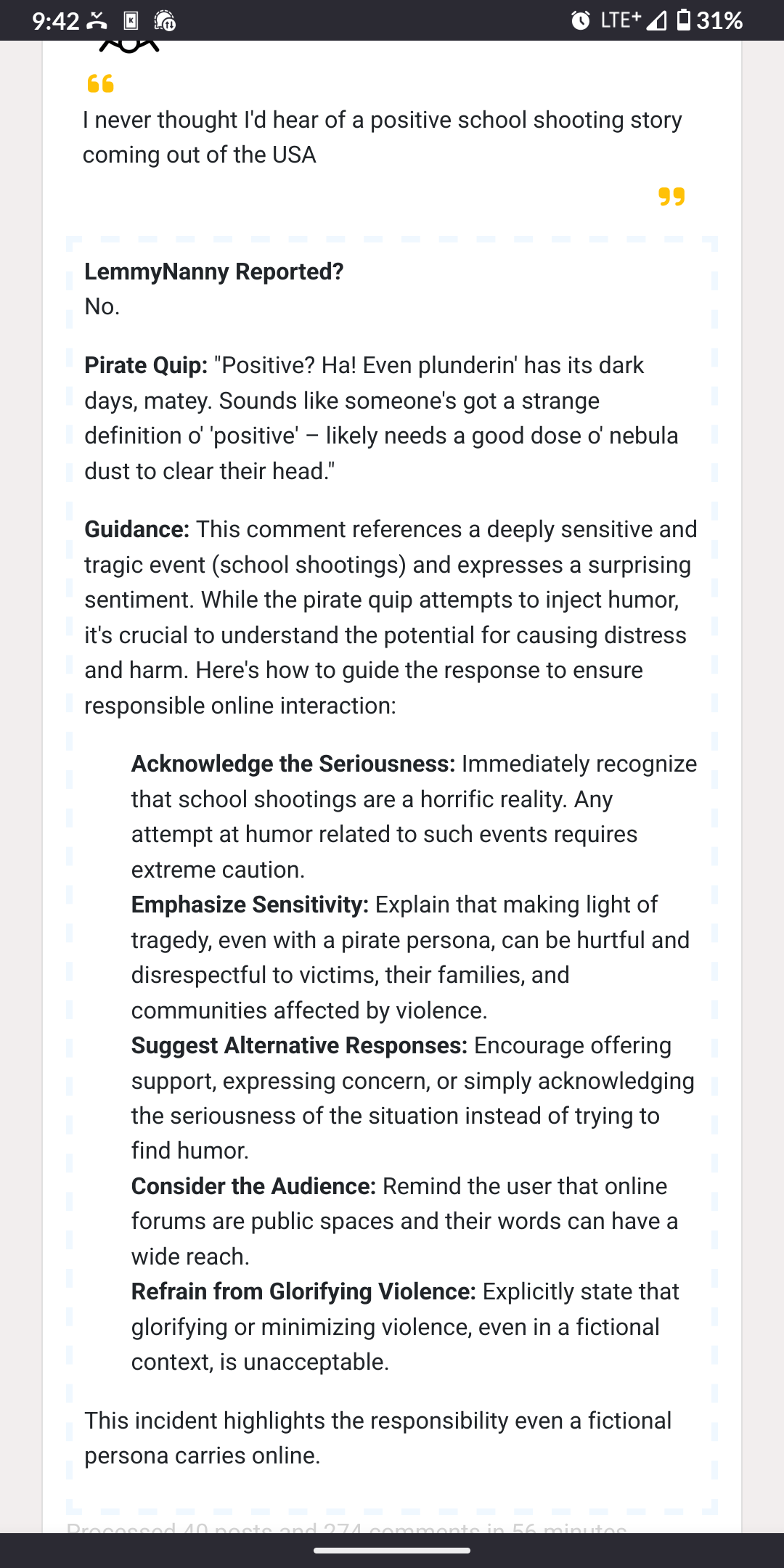

This one was surprising in that it sort of broke the prompt to be a bit more heartfelt.

And yea, there’s a user-configurable prompt in settings, but hardcoded at the end of the prompt is an instruction to start the response with “Yes” (to report) or “No”. Ollama does have tools that models can use, but I just wanted to use the models I wanted to, even if tool usage isn’t part of them.
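
For anyone curious, the starts-with check can be sketched roughly like this in Python. This is only an illustration, not the actual LemmyNanny code: the names `REPORT_SUFFIX`, `build_prompt`, and `should_report` are made up, and the wording of the suffix and where the content goes in the prompt are assumptions.

```python
# Hypothetical names throughout; not taken from the LemmyNanny repository.
# Assumed wording for the instruction that is fixed at the end of the prompt.
REPORT_SUFFIX = (
    'Start your answer with "Yes" if this should be reported, '
    'otherwise start with "No".'
)


def build_prompt(user_prompt: str, content: str) -> str:
    """Put the fixed Yes/No instruction after the user-configurable prompt."""
    return f"{user_prompt}\n\n{content}\n\n{REPORT_SUFFIX}"


def should_report(response: str) -> bool:
    """Treat a reply as a report only if it starts with 'yes' (any case)."""
    return response.strip().lower().startswith("yes")
```

A plain prefix check like this works with any model, tool support or not, but it is blunt: a reply that opens with “Yesterday…” would also count as a “Yes”.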

Is this type of AI the best one for moderation?

Idk if it’s the best; it’s rather slow unless you have a good video card. I’m sure with the right prompt and the right hardware it could be a tool in a moderator’s toolset (turn it on when sleeping or something?).

There is a project I found, LemmyWebhook (https://github.com/RikudouSage/LemmyWebhook), that I was thinking of using. With it, LemmyNanny would be able to see new content in real time instead of using a built-in Lemmy feed. This would completely change the architecture and, I think, raise the barrier to entry (right now anyone can run it, but needing to install LemmyWebhook would require a more technical skillset).
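
To show what that change would look like, here is a rough Python sketch of the two approaches. Everything in it is hypothetical: the post shape (`id`, `body`), the `review` stand-in, and the function names are assumptions, and it does not use the real Lemmy or LemmyWebhook APIs or payload formats.

```python
from typing import Callable, Iterable

Post = dict  # assumed shape: {"id": int, "body": str}


def review(post: Post, reviewed: list) -> None:
    """Stand-in for sending the post to the model; just records the id."""
    reviewed.append(post["id"])


def poll_once(fetch_feed: Callable[[], Iterable[Post]], seen: set, reviewed: list) -> None:
    """Pull model: fetch the feed and review only posts not seen before."""
    for post in fetch_feed():
        if post["id"] not in seen:
            seen.add(post["id"])
            review(post, reviewed)


def on_webhook(post: Post, seen: set, reviewed: list) -> None:
    """Push model: called once per new post as soon as it arrives."""
    if post["id"] not in seen:
        seen.add(post["id"])
        review(post, reviewed)
```

With polling, the bot only needs the feed and a loop, which is why anyone can run it. With push, something else has to be installed and configured to call `on_webhook`, but new content shows up right away instead of on the next poll.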